Hook: why single-model testing no longer cuts it

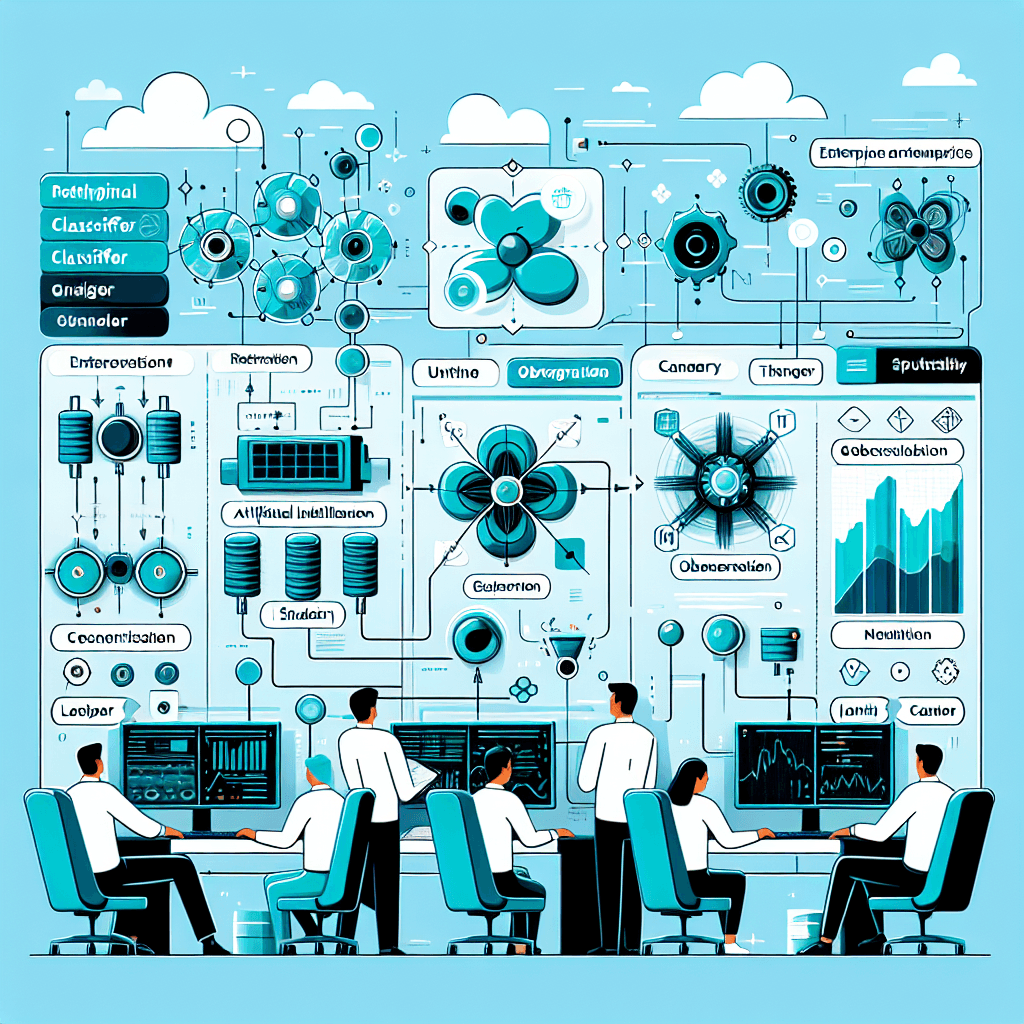

Modern enterprise AI systems are rarely one model doing one job. By 2025, many organizations run multi-agent or multi-model pipelines—retrieval components, NER models, policy engines, and generative models—working together. When components interact, failures compound: a small drift in an upstream classifier can trigger hallucinations downstream, or a latency spike in a ranking model can break SLAs. If your testing strategy still treats models in isolation, you're missing the real failure modes.

Why multi-model testing matters

Testing multi-model systems means validating not only model quality but composition, orchestration, latency, safety, and compliance. Empirically, enterprises adopting multi-agent architectures (about 73% in recent industry surveys) are increasing their focus on integrated QA workflows. The reasoning is simple: interactions introduce emergent behaviors that single-model metrics don't capture.

"Testing must move from model-level checks to system-level observability: it's the only way to surface emergent faults before they reach users."

Core testing types for multi-model systems

1. Unit and component tests

Test each model or component with targeted datasets and metrics: classification F1, NER span F1, hallucination rate for generators, and robustness to input perturbations. Keep these in CI/CD as fast, deterministic checks.

2. Integration and flow tests

Evaluate model composition: does the NER + intent classifier + policy engine produce sensible dialog flows? Use scenario-driven tests that capture common business processes (e.g., billing disputes, password resets).

3. Scenario and acceptance tests

Write user-centric scenarios that combine multiple models and external systems. These tests validate business outcomes, not just intermediate model scores.

4. Adversarial and stress testing

Apply targeted attacks and high-load conditions to expose vulnerabilities: prompt injection, data poisoning proxies, or throughput saturation. These uncover emergent failures and safety gaps.

5. Shadow and canary testing

Run candidate models in shadow mode against production traffic (non-acting) and roll out via canary release or blue/green deployment to validate behavior on real inputs with low risk.

6. Continuous monitoring and drift detection

Production monitoring closes the loop. Detect data and concept drift, monitor fairness metrics, latency P95/P99, and domain-specific safety indicators. Trigger retraining or rollback when thresholds breach.

Implementing a multi-model test pipeline

Here’s a pragmatic pipeline that scales to enterprise complexity:

- Pre-commit: fast unit tests for model artifacts and linters for prompts/configs.

- CI: run component training smoke tests, small evaluation suites, and static checks for model card metadata and governance tags.

- Integration stage: scenario-driven tests combining models and mocked downstream services.

- Staging (shadow): replay a sample of production traffic; run adversarial and stress tests here.

- Canary/production: staged rollout with telemetry, drift, and bias monitors. Enable automated rollback rules for key metrics.

- Post-deploy: continuous observability dashboards and periodic synthetic tests for edge cases.

Automate as much as possible: automated retraining pipelines, metric-based gating in CI/CD, and alerting for policy violations shorten detection and remediation loops.

Concrete example: customer support agent

Imagine an enterprise support assistant composed of a retrieval system, intent classifier, NER, and a generator. A good testing plan would include:

- Unit: named entity F1 > 0.9 on validation set; intent classifier accuracy > 92%.

- Integration: full conversation flows that simulate billing inquiries—assert the assistant suggests refund paths 95% of the time when applicable.

- Adversarial: injection of ambiguous or malicious prompts—assert hallucination rate < 1% and that the agent refuses unsafe tasks.

- Shadow: replay recent support queries to compare new vs. baseline responses; flag regressions in satisfaction proxies.

- Production: monitor resolution rate, escalation rate, latency P95, and content safety metrics continuously.

Operational trade-offs and governance

Testing multi-model systems increases infrastructure and test-data costs and requires specialized skills. Trade-offs include:

- Cost vs. coverage: full adversarial and stress test suites are expensive but reveal critical risks.

- Automation vs. human review: some safety checks still need human-in-the-loop evaluation for nuance.

- Data privacy vs. realism: production traffic is best for realism but may require anonymization to meet compliance.

"Investing in observability and staged rollouts pays for itself by preventing costly incidents and enabling faster, confident releases."

Actionable takeaways

- Start with a layered test plan: unit, integration, scenario, adversarial, and production monitoring.

- Integrate tests into

CI/CDwith metric gates and automated rollback rules. - Use a mix of synthetic and production-shadow datasets to cover edge cases without compromising privacy.

- Adopt staged deployments (

canary release,blue/green) and enforce observability for emergent behavior. - Measure what matters: safety indicators, hallucination rate, fairness metrics, latency P95/P99, and business KPIs.

Conclusion — ship smarter, not just faster

Multi-model testing is not a one-off project but a discipline. It mixes engineering rigor, adversarial thinking, and operational observability. Enterprises that invest in layered testing, integrate evaluation into their CI/CD pipelines, and use staged rollouts will trade upfront complexity for lower operational risk and faster, more reliable innovation.

Ready to move beyond single-model checks? Start by mapping your model interactions, prioritize the flows that matter to business outcomes, and add continuous monitors that can automatically gate or roll back releases. Testing at scale is achievable—if you treat it as system engineering, not just statistics.

Call to action: audit one critical multi-model flow this week—define three scenario tests, add one shadow-replay run, and add a metric-based canary gate in your pipeline. Observe what you catch; iterate from there.