The Vision Model Dilemma: More Choice, More Complexity

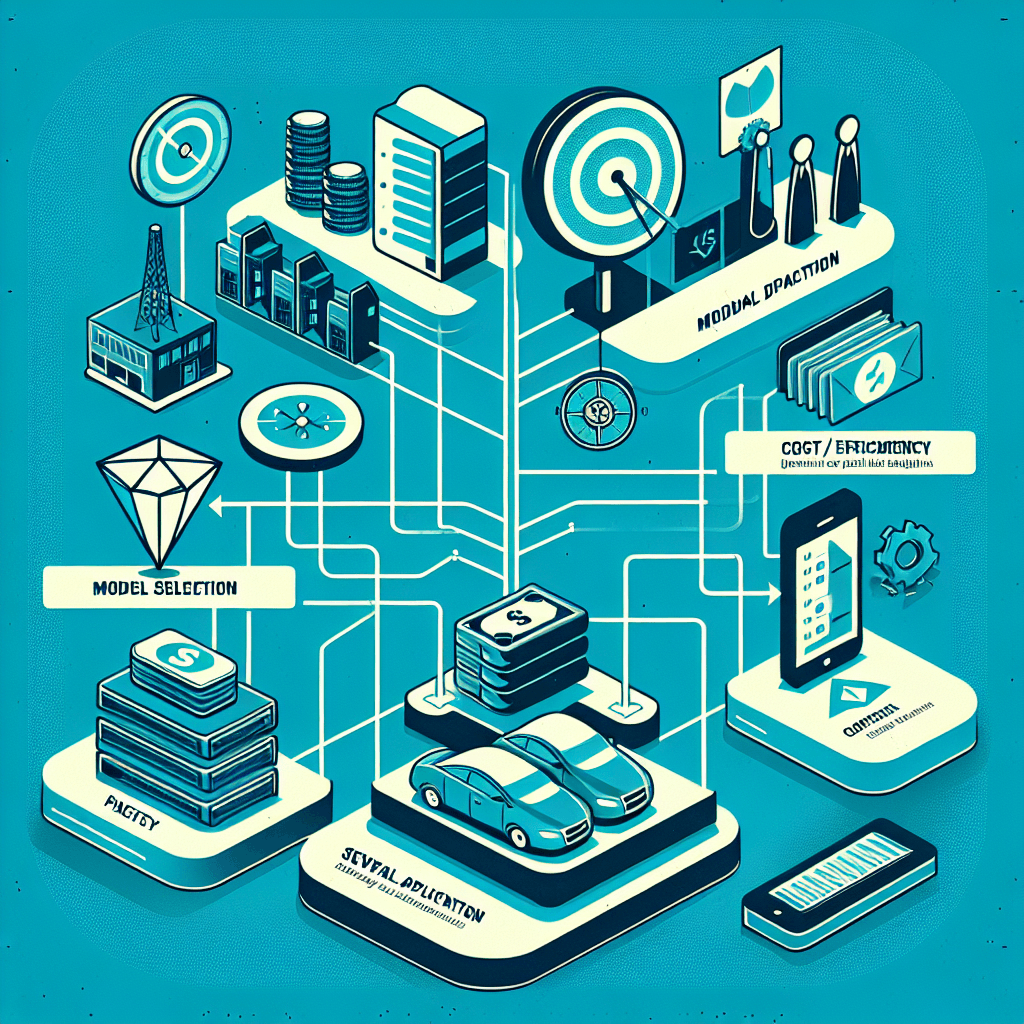

You have a brilliant idea for an AI application that needs to understand the visual world. Maybe it's an automated quality inspector for a manufacturing line, a smart document processor, or an interactive assistant that can answer questions about a live video feed. The excitement is palpable—until you hit the model selection phase. The landscape in 2026 is a dizzying array of options: Google's Gemini ecosystem with eleven distinct models, open-source powerhouses like Qwen2-VL, and a growing fleet of efficient Small Vision-Language Models (SVLMs). The benchmark leaderboards are a constant shuffle. How do you possibly choose?

Picking the right Vision Language Model is about more than performance metrics: it is about matching a model's strengths to your own needs.

This post cuts through the noise. We'll move beyond the hype of headline-grabbing scores and provide a practical framework for selecting the vision model that fits your project's unique constraints and goals.

Understanding the Modern Vision Model Landscape

First, let's clarify what we're talking about. Modern Vision Language Models (VLMs) are a quantum leap beyond traditional computer vision models. They are multimodal, meaning they can jointly understand and reason about both images and text. This allows them to interpret complex scenes, answer detailed questions about visual content, and even process videos and documents with embedded text. This versatility is what makes them so transformative across industries.

The Key Players in 2026

The field has matured into distinct categories:

- The Ecosystem Play (e.g., Google Gemini): Providers like Google offer a suite of models covering every use case, from the heavyweight Gemini 3 Pro for complex reasoning to the lightning-fast Gemini 3 Flash for high-throughput tasks and the lightweight Gemma 3 for edge applications. This approach offers consistency and ease of integration within a single platform.

- The Open-Source Challengers: The narrative has flipped. The rise of large open-source models like Qwen2-VL-7B suggests open-source models are beginning to surpass their closed-source counterparts, including GPT-4o. These models offer transparency, customization potential, and freedom from vendor lock-in, making them compelling for many enterprises.

- The Efficiency Experts (SVLMs): Born from the need for lower latency and cost, Small Vision-Language Models provide capable performance in a package efficient enough for on-device or real-time applications, without requiring massive cloud GPU clusters.

Your Decision Framework: The Four Pillars of Selection

Forget picking the "best" model. Your goal is to find the most suitable one. Evaluate every candidate against these four pillars.

1. Performance & Accuracy

This is where most people start, often by looking at benchmarks like mean Average Precision (mAP) for detection or scores on VQA (Visual Question Answering) leaderboards. Here's the critical caveat:

Benchmarks are important, but treat them with caution: they are by no means the only reference for choosing the right model for your use case. A model that excels at identifying everyday objects in COCO may struggle with grainy, specialized X-ray images. Always ask: How representative are public benchmarks of my private, domain-specific data?

2. Speed & Latency

Is your application interactive? A customer-facing chatbot needs responses in under a second. A batch job analyzing thousands of overnight security clips can take hours. Inference speed, often measured in frames per second (FPS) or time-to-first-token, is non-negotiable for real-time use cases. Plotting speed against accuracy is essential for identifying the "Pareto frontier": the set of models that offer the best possible accuracy for a given speed constraint.
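To make the Pareto frontier idea concrete, here is a minimal sketch that filters a list of measured candidates down to the non-dominated ones. The `Candidate` type, the model names, and every number are made up for illustration; plug in your own measurements.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    latency_ms: float  # lower is better
    accuracy: float    # higher is better


def pareto_frontier(candidates: list[Candidate]) -> list[Candidate]:
    """Return the candidates that no other candidate beats on both axes.

    Exact ties on both latency and accuracy keep only the first entry.
    """
    # Sort fastest first; among equal latency, most accurate first.
    ordered = sorted(candidates, key=lambda c: (c.latency_ms, -c.accuracy))
    frontier: list[Candidate] = []
    best_accuracy = float("-inf")
    for c in ordered:
        # A slower model earns its place only by beating every faster one.
        if c.accuracy > best_accuracy:
            frontier.append(c)
            best_accuracy = c.accuracy
    return frontier


# Hypothetical measurements, not real benchmark results.
measured = [
    Candidate("model-a", 40.0, 0.81),
    Candidate("model-b", 120.0, 0.88),
    Candidate("model-c", 150.0, 0.86),  # slower and less accurate than model-b
    Candidate("model-d", 900.0, 0.93),
]
frontier_names = [c.name for c in pareto_frontier(measured)]
print(frontier_names)
```

Here `model-c` drops out because `model-b` is both faster and more accurate. Everything left on the frontier is a legitimate trade-off, and your latency budget decides which point you pick.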

3. Efficiency & Cost

This encompasses parameter count, memory footprint, and computational requirements. A 70-billion-parameter model may deliver stunning results but be prohibitively expensive to run at scale. A 3-billion-parameter SVLM might be, say, 95% as accurate for your task at a tenth of the cost. Parameter efficiency translates directly to your cloud bill or hardware requirements.
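A quick back-of-the-envelope calculation shows how parameter count turns into hardware requirements. The sketch below estimates the memory needed for the weights alone, assuming a fixed number of bytes per parameter. It ignores activations, the KV cache, and framework overhead, so treat the result as a lower bound. The function name and the precision table are illustrative.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Approximate memory for model weights alone, in GB (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


large_fp16 = weight_memory_gb(70e9, "fp16")  # 70B parameters
small_fp16 = weight_memory_gb(3e9, "fp16")   # 3B parameters
large_int4 = weight_memory_gb(70e9, "int4")  # same 70B model, 4-bit weights

print(f"70B @ fp16: {large_fp16:.0f} GB")
print(f" 3B @ fp16: {small_fp16:.0f} GB")
print(f"70B @ int4: {large_int4:.0f} GB")
```

At fp16 the 70B model needs about 140 GB for weights, which means multiple accelerators, while the 3B model fits in about 6 GB. Quantization narrows the gap but does not close it.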

4. Ease of Deployment

How will you serve this model? Does it have readily available Docker containers, optimized inference engines (like TensorRT or ONNX Runtime), and community support? Can it run on your existing on-premise hardware, or is it only available as a cloud API? Deployment complexity is a hidden project killer.

Mapping Use Cases to Model Types

Let's apply this framework with concrete examples.

Scenario 1: Real-Time Industrial Inspection

Needs: Ultra-low latency (<100ms), high precision for specific defects, runs on factory-floor edge devices.

Priorities: Speed & Latency > Efficiency > Performance (on specific classes) > Deployment (edge-friendly).

Model Choice: A specialized, fine-tuned Small VLM (SVLM) or an efficient object detection model (like a YOLO variant) from the Pareto frontier that excels at the speed/accuracy trade-off. A massive general-purpose VLM API would be too slow and costly.
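A latency target like the <100 ms budget above is best checked against a tail percentile rather than an average, since the slow requests are the ones that stall a production line. Here is a rough sketch of such a check using only the standard library. `fake_inference` is a stand-in for your real model call, and the run and warm-up counts are arbitrary choices.

```python
import statistics
import time
from typing import Callable


def measure_latencies_ms(
    run_inference: Callable[[], object], runs: int = 50, warmup: int = 5
) -> list[float]:
    """Time repeated calls and return per-call latency in milliseconds."""
    for _ in range(warmup):
        run_inference()  # warm-up calls are not timed
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        run_inference()
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def p95(samples: list[float]) -> float:
    """95th percentile: the 95th of the 99 cut points for n=100."""
    return statistics.quantiles(samples, n=100)[94]


def within_budget(samples: list[float], budget_ms: float) -> bool:
    return p95(samples) <= budget_ms


def fake_inference() -> int:
    # Placeholder workload; replace with a real model call on a real image.
    return sum(range(1000))


samples = measure_latencies_ms(fake_inference)
p95_ms = p95(samples)
print(f"p95 latency: {p95_ms:.3f} ms, within 100 ms budget: {within_budget(samples, 100.0)}")
```

Run this on the target edge device with representative images. Numbers from a development workstation will tell you little about factory-floor hardware.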

Scenario 2: Research & Complex Document Analysis

Needs: Extract and reason over information from complex PDFs with charts, tables, and text.

Priorities: Performance (on document understanding) > Deployment (flexibility) > Efficiency > Speed.

Model Choice: A top-tier open-source VLM like Qwen2-VL-72B or a powerful API model like Gemini 3 Pro. The need for deep reasoning and handling novel formats justifies the higher cost and latency.

Scenario 3: Scalable Social Media Content Moderation

Needs: Classify millions of images daily for policy violations while balancing accuracy against very high throughput.

Priorities: Efficiency (cost per image) > Speed (throughput) > Performance > Deployment (scalable APIs).

Model Choice: A fast, batch-optimized model like Gemini 3 Flash or an open-source model served on a cost-optimized GPU cluster. The focus is on total cost of ownership at scale.
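One simple way to turn the priority orderings above into a comparison is a weighted score. The sketch below converts an ordered list of pillars into linear rank weights and scores each candidate. The linear weighting is an arbitrary assumption, not something the framework prescribes, and the candidate names and 0-to-1 pillar scores are invented for illustration.

```python
def rank_weights(priority_order: list[str]) -> dict[str, float]:
    """Turn an ordered priority list into weights that sum to 1.

    With four pillars the ranks get 4, 3, 2, 1 points before normalizing.
    """
    n = len(priority_order)
    total = n * (n + 1) / 2
    return {pillar: (n - i) / total for i, pillar in enumerate(priority_order)}


def weighted_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Combine per-pillar scores (each in 0..1) into a single number."""
    return sum(weights[p] * scores[p] for p in weights)


# Scenario 1 ordering: speed > efficiency > performance > deployment.
inspection_weights = rank_weights(
    ["speed", "efficiency", "performance", "deployment"]
)

# Hypothetical pillar scores from your own prototyping, normalized to 0..1.
candidates = {
    "edge-svlm": {"performance": 0.70, "speed": 0.90, "efficiency": 0.90, "deployment": 0.80},
    "large-api-vlm": {"performance": 0.95, "speed": 0.30, "efficiency": 0.20, "deployment": 0.60},
}

ranked = sorted(
    candidates,
    key=lambda name: weighted_score(candidates[name], inspection_weights),
    reverse=True,
)
for name in ranked:
    print(name, round(weighted_score(candidates[name], inspection_weights), 2))
```

Swap in the Scenario 2 ordering and the same candidates rank differently, which is the point: the ranking comes from your priorities, not from any single leaderboard number.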

Actionable Takeaways for Your Selection Process

- Start with Your Non-Negotiables: Write down your absolute limits for latency, cost per inference, and data privacy (cloud vs. on-prem). This will instantly eliminate half the field.

- Prototype with 2-3 Archetypes: Don't just test the benchmark leader. Try one large API model, one leading open-source model, and one efficient SVLM on a representative sample of your actual data.

- Measure What Matters to You: Create a small, custom evaluation set from your domain. Test the models on your specific tasks and measure the metrics that align with your business goal (e.g., "percentage of defects caught" vs. generic mAP).

- Consider the Total Cost of Ownership (TCO): Factor in not just inference cost, but also development time, MLOps overhead, and the flexibility to fine-tune or customize the model down the line.
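The first and third takeaways can be sketched in a few lines: filter candidates against hard limits, then score the survivors on a metric tied to your goal, here "percentage of defects caught" (recall on the defect class). The `ModelProfile` fields, the thresholds, and all data are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str
    p95_latency_ms: float
    cost_per_1k_images_usd: float
    runs_on_prem: bool


def passes_non_negotiables(
    m: ModelProfile,
    max_latency_ms: float,
    max_cost_per_1k_usd: float,
    require_on_prem: bool,
) -> bool:
    """True only if the model satisfies every hard constraint."""
    return (
        m.p95_latency_ms <= max_latency_ms
        and m.cost_per_1k_images_usd <= max_cost_per_1k_usd
        and (m.runs_on_prem or not require_on_prem)
    )


def defect_recall(labels: list[bool], predictions: list[bool]) -> float:
    """Fraction of truly defective items that the model flagged."""
    if len(labels) != len(predictions):
        raise ValueError("labels and predictions must have the same length")
    total_defects = sum(labels)
    if total_defects == 0:
        raise ValueError("evaluation set contains no defects")
    caught = sum(1 for y, p in zip(labels, predictions) if y and p)
    return caught / total_defects


# Invented profiles; fill these in from your own measurements.
profiles = [
    ModelProfile("api-large", 800.0, 5.00, False),
    ModelProfile("open-7b", 90.0, 0.80, True),
    ModelProfile("svlm-2b", 35.0, 0.20, True),
]
shortlist = [
    m.name
    for m in profiles
    if passes_non_negotiables(m, max_latency_ms=100.0, max_cost_per_1k_usd=1.0, require_on_prem=True)
]

# Tiny made-up evaluation set: True means "defective".
labels = [True, True, False, True, False]
predictions = [True, False, False, True, True]
recall = defect_recall(labels, predictions)
print(shortlist, round(recall, 3))
```

Recall deliberately ignores false alarms. If stopping the line on a false positive is expensive, track precision alongside it.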

Conclusion: The Right Model is the One You Don't Have to Fight

The most sophisticated model in the world is the wrong choice if it can't meet your latency requirements, blows your budget, or is impossible to deploy in your environment. In 2026, with both open and closed-source models achieving remarkable capabilities, the power has shifted to the practitioner.

The winning strategy is to be ruthlessly pragmatic. Define your constraints, understand the trade-offs on the Pareto frontier, and validate candidates against your real-world data. The "best" vision model isn't the one with the highest score on a leaderboard; it's the one that disappears into your application, working reliably and efficiently to solve your user's problem. Start your selection there.