You've integrated a Vision API into your application. It works beautifully in testing. Then production hits: accuracy drops on real-world images, costs spiral as traffic scales, and your compliance team raises questions about data residency. Sound familiar?

Computer vision in 2025 is no longer just about building classifiers—it's about integrating foundation models, interactive segmentation, on-device inference, and cloud-first APIs into reliable production pipelines. The gap between a working prototype and a production-ready system is where most teams struggle. Let's bridge that gap with patterns that actually work.

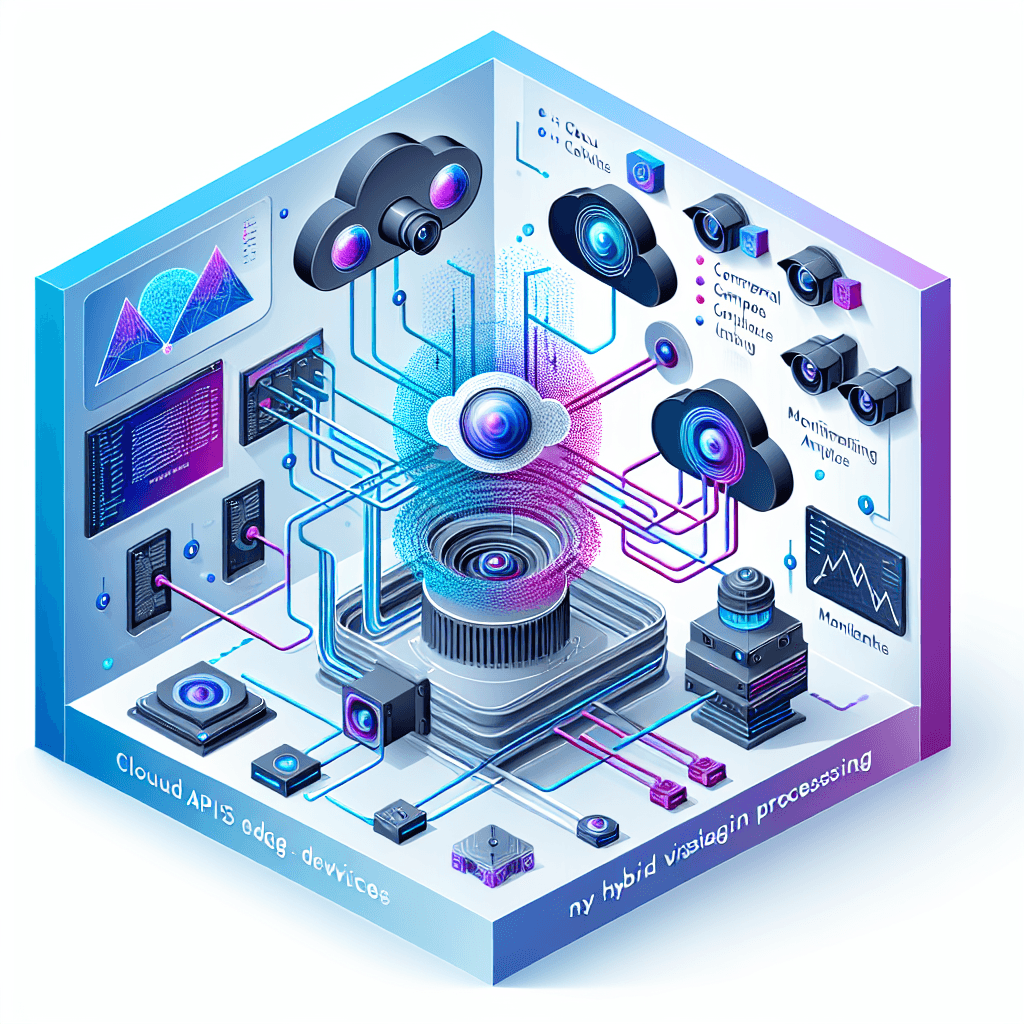

The Hybrid Architecture Pattern: Your Strategic Starting Point

The most successful companies aren't choosing between cloud APIs and custom models; they're strategically combining both. Here's the pattern many production teams have converged on:

Start With Cloud APIs for Discovery

Begin with established Vision APIs like Google Cloud Vision, Amazon Rekognition, or Azure AI Vision (formerly Azure Computer Vision) for rapid prototyping. This approach gives you immediate capabilities such as image labeling, face detection, OCR, object detection, and explicit content filtering, all without training a single model.

But here's the critical insight: treat this phase as intelligence gathering. You're not just building features; you're collecting data about where general-purpose APIs succeed and where they fail in your specific domain.

Monitor, Measure, and Identify Gaps

Implement robust logging from day one. Track:

- Confidence scores across different image types

- False positive and false negative rates by category

- API response times at different times of day

- Cost per prediction as volume scales

- Edge cases where the API struggles

For example, a medical imaging startup might discover that a general-purpose API such as Google Cloud Vision copes reasonably well with standard X-rays but struggles with the specialized imaging techniques specific to their niche. That record of successes and failures becomes your roadmap for custom model development.
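The tracking list above can be captured with one structured log record per API call. Here's a minimal sketch, assuming a hypothetical client function that takes image bytes and returns a dict with `label` and `confidence`; the function names, the field names, and the per-call price are placeholders, not any vendor's actual SDK or pricing.

```python
import hashlib
import json
import time
from typing import Callable


def log_vision_call(
    image_bytes: bytes,
    image_type: str,
    call_api: Callable[[bytes], dict],
    cost_per_call_usd: float,
) -> dict:
    """Call a Vision API client and return a structured log record for the call."""
    started = time.perf_counter()
    response = call_api(image_bytes)  # hypothetical client function
    latency_ms = (time.perf_counter() - started) * 1000.0

    record = {
        "timestamp": time.time(),
        # Hash instead of raw bytes so logs stay small and free of image data.
        "image_sha256": hashlib.sha256(image_bytes).hexdigest(),
        "image_type": image_type,
        "label": response.get("label"),
        "confidence": response.get("confidence"),
        "latency_ms": round(latency_ms, 2),
        "cost_usd": cost_per_call_usd,
    }
    # One JSON object per line is easy to ship to most log pipelines.
    print(json.dumps(record))
    return record


def fake_vision_api(image_bytes: bytes) -> dict:
    """Stand-in for a real client; always returns the same response."""
    return {"label": "invoice", "confidence": 0.62}


record = log_vision_call(b"example-bytes", "scanned_document", fake_vision_api, 0.0015)
```

False positive and false negative rates need ground truth, so they come from joining these records with human-reviewed labels later rather than from the call itself.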

Build Custom Models for Specific Challenges

The goal isn't to replace your Vision API entirely—it's to augment it where your domain demands precision that general-purpose models can't deliver.

Focus your custom modeling efforts on:

- Domain-specific objects that general APIs don't recognize

- Cases where you need explainability for regulatory compliance

- Privacy-sensitive scenarios requiring on-device processing

- High-volume, low-complexity tasks where cost optimization matters

Production-Grade Evaluation: Beyond Accuracy Metrics

Here's an uncomfortable truth: your model's accuracy will degrade over time in production. It's not a question of if, but when and by how much.

Implement Continuous Evaluation

Production computer vision requires continuous evaluation, drift detection, and a retraining cadence built into your development lifecycle. This isn't optional overhead—it's the difference between a system that remains useful and one that quietly fails.

Set up automated monitoring for:

- Data drift: Are the images you're receiving changing over time? New camera models, different lighting conditions, or seasonal variations can all impact performance.

- Prediction drift: Are confidence scores trending downward? Are certain classes being predicted more or less frequently?

- Ground truth validation: Regularly sample predictions for human review to catch subtle accuracy degradation.
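One way to put a number on prediction drift is the population stability index (PSI), computed over how often each class is predicted. The sketch below is an illustration rather than a prescribed method: the function names are made up for this example, and the thresholds in the comments are a commonly quoted rule of thumb that you should tune against your own data.

```python
import math
from collections import Counter


def class_distribution(labels, classes, eps=1e-6):
    """Return each class's share of the predictions, floored at eps to avoid log(0)."""
    if not labels:
        raise ValueError("need at least one prediction")
    counts = Counter(labels)
    return {c: max(counts[c] / len(labels), eps) for c in classes}


def population_stability_index(baseline_labels, recent_labels):
    """PSI between a baseline window and a recent window of predicted labels."""
    classes = sorted(set(baseline_labels) | set(recent_labels))
    expected = class_distribution(baseline_labels, classes)
    actual = class_distribution(recent_labels, classes)
    return sum(
        (actual[c] - expected[c]) * math.log(actual[c] / expected[c])
        for c in classes
    )


baseline = ["cat"] * 50 + ["dog"] * 50
stable = ["cat"] * 48 + ["dog"] * 52
shifted = ["cat"] * 20 + ["dog"] * 80

psi_stable = population_stability_index(baseline, stable)
psi_shifted = population_stability_index(baseline, shifted)

# Rule of thumb (an assumption, not a standard): below 0.1 is stable,
# above 0.25 deserves investigation.
print(f"stable window PSI:  {psi_stable:.4f}")
print(f"shifted window PSI: {psi_shifted:.4f}")
```

The same calculation works for data drift if you bucket an input property (image brightness, resolution, camera model) instead of predicted labels.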

Consider implementing a shadow deployment pattern, where a new model version runs in parallel with production on the same traffic and its predictions are logged but never served. That lets you compare performance on real inputs before switching over.
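A shadow run boils down to comparing two sets of predictions for the same images. Here's a minimal sketch of that comparison; `ShadowResult` and `summarize_shadow_run` are hypothetical names, and it assumes both models emit a single label per image.

```python
from dataclasses import dataclass


@dataclass
class ShadowResult:
    image_id: str
    production_label: str
    candidate_label: str


def summarize_shadow_run(results):
    """Return the agreement rate and the disagreements worth sending to human review."""
    if not results:
        raise ValueError("no shadow results to summarize")
    disagreements = [r for r in results if r.production_label != r.candidate_label]
    agreement_rate = 1 - len(disagreements) / len(results)
    return agreement_rate, disagreements


results = [
    ShadowResult("img-1", "defect", "defect"),
    ShadowResult("img-2", "ok", "ok"),
    ShadowResult("img-3", "ok", "defect"),
    ShadowResult("img-4", "defect", "defect"),
]
rate, to_review = summarize_shadow_run(results)
print(f"agreement: {rate:.0%}, review: {[r.image_id for r in to_review]}")
```

Agreement alone doesn't tell you which model is right, so the disagreements are the cases to label by hand before deciding on the switch.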

Domain-Specific Accuracy Matters More Than Benchmarks

Every Vision API is trained on its own mix of data, so one may perform better on images from the medical field while another excels with automotive imagery. That impressive benchmark score on ImageNet? It might be irrelevant for your use case.

Before selecting an API vendor, measure candidates against your specific datasets. Create a representative test set of 100-500 images, split roughly as follows:

- Typical use cases (about 80% of the set, mirroring the bulk of your traffic)

- Edge cases (about 15% of the set)

- Known failure modes (about 5% of the set, for stress testing)

Test each API candidate against this set and measure what matters: precision and recall for your specific classes, not generic benchmarks.
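Per-class precision and recall are straightforward to compute once you have each candidate's predictions next to your ground-truth labels. This sketch assumes single-label classification, and the class names are invented for the example.

```python
from collections import defaultdict


def per_class_precision_recall(pairs):
    """Compute precision and recall per class from (true_label, predicted_label) pairs."""
    tp = defaultdict(int)
    fp = defaultdict(int)
    fn = defaultdict(int)
    for truth, predicted in pairs:
        if truth == predicted:
            tp[truth] += 1
        else:
            fp[predicted] += 1  # predicted this class, but it was wrong
            fn[truth] += 1      # missed the true class

    metrics = {}
    for label in sorted(set(tp) | set(fp) | set(fn)):
        predicted_total = tp[label] + fp[label]
        actual_total = tp[label] + fn[label]
        metrics[label] = {
            "precision": tp[label] / predicted_total if predicted_total else 0.0,
            "recall": tp[label] / actual_total if actual_total else 0.0,
        }
    return metrics


# (ground truth, API prediction) for a tiny illustrative test set
pairs = [
    ("scratch", "scratch"),
    ("scratch", "dent"),
    ("dent", "dent"),
    ("dent", "dent"),
    ("none", "scratch"),
    ("none", "none"),
]
metrics = per_class_precision_recall(pairs)
for label, m in metrics.items():
    print(f"{label}: precision={m['precision']:.2f} recall={m['recall']:.2f}")
```

Run the same pairs-building step for each vendor and compare the per-class numbers side by side, paying most attention to the classes your product actually depends on.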

Optimizing for Edge Deployment

Not every prediction needs to happen in the cloud. Privacy requirements, latency constraints, or connectivity limitations often push computer vision to edge devices. But mobile and IoT devices have strict size and speed constraints.

Quantization: Your Primary Optimization Tool

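Quantization can shrink a model by up to roughly 4x, and speedups of 2-3x are commonly reported on hardware with efficient integer arithmetic, usually with low accuracy loss. The technique works by converting model weights from 32-bit floating point to 8-bit integers, which cuts each weight from four bytes to one and reduces both memory footprint and computational requirements. The actual speedup depends on the target device and runtime, so measure it rather than assume it.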
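To make the float-to-integer conversion concrete, here's the arithmetic on a handful of weights in plain Python. It uses a simple symmetric scheme with one scale per tensor, which is an assumption for illustration; real toolkits offer several schemes and also handle activations, so treat this as a picture of the idea rather than what any particular converter does.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] plus one float scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]


weights = [0.823, -1.27, 0.051, 0.0, 0.644]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

float32_bytes = 4 * len(weights)  # 32 bits per weight
int8_bytes = 1 * len(weights)     # 8 bits per weight

print("quantized:", q)
print(f"scale: {scale:.4f}, worst rounding error: {max_error:.4f}")
print(f"size ratio: {float32_bytes / int8_bytes:.0f}x")
```

The rounding error per weight is bounded by half the scale, which is why accuracy usually holds up but still needs checking on your own validation data.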

Here's a practical workflow:

1. Train your model at full precision

2. Apply post-training quantization using tools like TensorFlow Lite or PyTorch Mobile

3. Measure accuracy on your validation set

4. If accuracy loss is acceptable (typically <2%), deploy the quantized version

5. If not, try quantization-aware training, where the model learns to maintain accuracy despite quantization

Critical caveat: Always verify the optimized model's accuracy still meets your requirements. That 2% accuracy drop might be negligible for a content moderation system but unacceptable for medical diagnosis.
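The accept-or-retrain decision in that workflow is easy to automate as a gate in your release pipeline. In this sketch the two "models" are toy functions standing in for your full-precision and quantized models, and the thresholds are example values for two different risk profiles, not recommendations.

```python
def accuracy(predict, validation_set):
    """Share of validation examples the model labels correctly."""
    correct = sum(1 for x, y in validation_set if predict(x) == y)
    return correct / len(validation_set)


def choose_model(full_predict, quantized_predict, validation_set, max_drop):
    """Deploy the quantized model only if accuracy drops by at most max_drop."""
    drop = accuracy(full_predict, validation_set) - accuracy(quantized_predict, validation_set)
    if drop <= max_drop:
        return "deploy_quantized", drop
    return "try_quantization_aware_training", drop


# Toy stand-ins: the task is "is this number odd?"
validation_set = [(x, x % 2) for x in range(100)]


def full_model(x):
    return x % 2


def quantized_model(x):
    # Pretend quantization broke exactly one example.
    return 1 - x % 2 if x == 7 else x % 2


moderation_decision, drop = choose_model(full_model, quantized_model, validation_set, max_drop=0.02)
medical_decision, _ = choose_model(full_model, quantized_model, validation_set, max_drop=0.005)
print(f"drop={drop:.3f} moderation={moderation_decision} medical={medical_decision}")
```

The same one-point drop passes a lenient gate and fails a strict one, which is the point of setting the threshold per use case.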

The Edge-Cloud Continuum

Design your architecture to optimize across edge and cloud based on performance and privacy needs. Consider this decision matrix:

- Process on-device when: Privacy is paramount, latency must stay under 100 ms, or connectivity is unreliable

- Process in the cloud when: Model complexity exceeds device capabilities, you need access to the latest models, or you're aggregating insights across users

- Use a hybrid approach when: You can do quick classification on-device and detailed analysis in the cloud
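The decision matrix above translates into a small routing function. This is a sketch under simplifying assumptions: the field names are hypothetical, each request is described by four flags, and hard constraints (privacy, latency, connectivity) always win over everything else.

```python
from dataclasses import dataclass


@dataclass
class InferenceRequest:
    privacy_sensitive: bool
    latency_budget_ms: int
    connectivity_reliable: bool
    model_fits_on_device: bool


def choose_target(request: InferenceRequest) -> str:
    """Return 'device', 'cloud', or 'hybrid' for a request."""
    must_stay_local = (
        request.privacy_sensitive
        or request.latency_budget_ms < 100
        or not request.connectivity_reliable
    )
    if must_stay_local:
        # If the model does not fit on the device, this case needs a smaller
        # model; that trade-off is outside this sketch.
        return "device"
    if not request.model_fits_on_device:
        return "cloud"
    # Quick classification on-device, detailed analysis in the cloud.
    return "hybrid"


kiosk = InferenceRequest(True, 500, True, True)
catalog_job = InferenceRequest(False, 2000, True, False)
mobile_app = InferenceRequest(False, 800, True, True)

print(choose_target(kiosk), choose_target(catalog_job), choose_target(mobile_app))
```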

Choosing the Right Vision API: A Decision Framework

With dozens of Vision APIs available, selection paralysis is real. Here's a practical framework for evaluation:

Four Critical Evaluation Criteria

1. Domain accuracy: As discussed, test with your specific data. Generic benchmarks don't predict real-world performance.

2. Compliance and governance: Does the vendor meet your data residency and storage requirements? Are audit logs available? Can you prove GDPR, HIPAA, or industry-specific compliance?

3. Integration speed: Do they provide robust SDKs for your tech stack? Is documentation comprehensive? Can you prototype in days, not weeks?

4. Vendor continuity: Is this a core product or a side project? What's their track record with API versioning and deprecation? You're building for years, not months.

The cheapest API today might become the most expensive technical debt tomorrow if it doesn't scale with your needs or gets deprecated.
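If you want the four criteria to produce a comparable number per vendor, a weighted score is one simple option. Everything in this sketch is made up for illustration: the weights, the 1-5 scores, and the vendor names. Set the weights to reflect your own priorities before trusting the ranking.

```python
# Hypothetical weights; they should sum to 1.
WEIGHTS = {
    "domain_accuracy": 0.40,
    "compliance": 0.30,
    "integration_speed": 0.15,
    "vendor_continuity": 0.15,
}


def weighted_score(scores, weights=WEIGHTS):
    """Combine 1-5 scores per criterion into a single weighted score."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[name] * scores[name] for name in weights)


candidates = {
    "vendor_a": {"domain_accuracy": 4, "compliance": 2, "integration_speed": 5, "vendor_continuity": 4},
    "vendor_b": {"domain_accuracy": 3, "compliance": 5, "integration_speed": 3, "vendor_continuity": 4},
}

ranked = sorted(candidates, key=lambda name: weighted_score(candidates[name]), reverse=True)
for name in ranked:
    print(f"{name}: {weighted_score(candidates[name]):.2f}")
```

A hard requirement such as HIPAA eligibility is better treated as a pass/fail filter before scoring than as one weight among four.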

Cost Optimization Strategies

Vision API costs can surprise you at scale. A prediction that costs $0.001 seems trivial until you're processing millions of images daily.
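The arithmetic is worth running for your own volumes. This sketch uses the $0.001 figure from above and a flat per-image price, which is an assumption; real pricing is often tiered by volume and by feature.

```python
def monthly_cost_usd(price_per_image, images_per_day, days=30):
    """Projected monthly spend at a flat per-image price."""
    return price_per_image * images_per_day * days


for daily_volume in (10_000, 1_000_000, 5_000_000):
    cost = monthly_cost_usd(0.001, daily_volume)
    print(f"{daily_volume:>9,} images/day -> ${cost:,.0f}/month")
```

At a million images a day, that "trivial" tenth of a cent adds up to about $30,000 a month.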

Practical cost reduction techniques:

- Implement intelligent caching: For user-uploaded content, cache results by image hash to avoid reprocessing duplicates

- Use tiered processing: Run a fast, cheap classifier first to filter out obvious cases, then use expensive APIs only for uncertain predictions

- Batch when possible: Some APIs offer better pricing or higher throughput for batch processing if real-time isn't required, so check each vendor's pricing before assuming a discount

- Right-size your requests: Don't send 4K images if 1080p provides sufficient accuracy. Smaller uploads cut bandwidth and latency, and they cut processing cost too wherever pricing or compute scales with image size

- Transition high-volume tasks to custom models: Once you have sufficient training data, the economics often favor custom models for repetitive tasks
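The first two techniques combine naturally: check a cache keyed by image hash, then run the cheap classifier, and only call the expensive API when the cheap one is unsure. Here's a minimal sketch with stand-in models; the class name, the 0.9 threshold, and both model functions are hypothetical.

```python
import hashlib


class TieredClassifier:
    """Cache by image hash, then cheap model, then expensive API if uncertain."""

    def __init__(self, cheap_model, expensive_api, confidence_threshold=0.9):
        self.cheap_model = cheap_model
        self.expensive_api = expensive_api
        self.confidence_threshold = confidence_threshold
        self.cache = {}  # unbounded in-memory dict; use a real cache in production
        self.expensive_calls = 0

    def classify(self, image_bytes: bytes) -> str:
        key = hashlib.sha256(image_bytes).hexdigest()
        if key in self.cache:
            return self.cache[key]

        label, confidence = self.cheap_model(image_bytes)
        if confidence < self.confidence_threshold:
            # Cheap model is unsure, so pay for the better answer.
            label = self.expensive_api(image_bytes)
            self.expensive_calls += 1

        self.cache[key] = label
        return label


def cheap_model(image_bytes):
    """Stand-in: confident only about very small (blank-looking) inputs."""
    return ("blank", 0.99) if len(image_bytes) < 5 else ("unknown", 0.4)


def expensive_api(image_bytes):
    """Stand-in for a paid Vision API call."""
    return "product_photo"


classifier = TieredClassifier(cheap_model, expensive_api)
first = classifier.classify(b"abc")
second = classifier.classify(b"a-larger-image")
third = classifier.classify(b"a-larger-image")  # served from cache
print(first, second, third, classifier.expensive_calls)
```

An exact hash only catches byte-identical duplicates; resized or re-encoded copies of the same picture would need a perceptual hash, which this sketch leaves out.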

Building for the Long Term

Computer vision systems in production require a different mindset than prototype development. You're building infrastructure that needs to remain reliable, accurate, and cost-effective as your business scales.

The hybrid approach—starting with cloud APIs, gathering intelligence, building custom models where needed, and optimizing deployment across edge and cloud—isn't just best practice. It's the pattern that separates systems that scale from those that crumble under production load.

Start simple, measure everything, and evolve based on data rather than assumptions. Your future self, debugging a production incident at 2 AM, will thank you for the monitoring you implemented today.

What's your next step? If you're still in prototype phase, instrument your Vision API calls with comprehensive logging. If you're facing production challenges, audit where your current architecture doesn't match the patterns discussed here. And if you're planning a new computer vision system, resist the temptation to build custom models first—let cloud APIs prove where custom solutions are actually needed.